Estimation of seismic ground motions and their variability using a neural-network approach

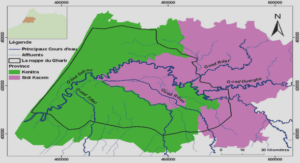

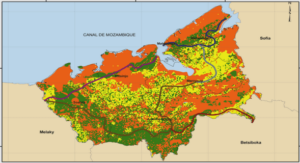

Chapter II: Development of a random-effects GMPE driven by the RESORCE data

Presentation of the RESORCE database: a first-order study

The collaboration between the RISAM laboratory (RIsk ASsessment And Management) in Tlemcen, the University Dr Moulay Tahar of Saida (Algeria), and the Institut des Sciences de la Terre (ISTerre) in Grenoble (France) allowed us to launch a research project on neural GMPEs using the Euro-Mediterranean and Middle East database (figures I.6 and I.7). This database, already mentioned in Chapter I, contains 5115 recordings from 1721 seismic events, as shown in table II.1. Selection criteria are applied to discard records that do not carry information on source, wave-propagation, and site effects all at once. The selected earthquakes are those with a focal depth ≤ 25 km (crustal earthquakes) and a moment magnitude ≥ 3.5 (felt earthquakes). Earthquakes with no information on the focal mechanism are discarded. The Joyner-Boore distance (RJB) and the measured Vs30 represent the wave-propagation and site effects, respectively. This screening leaves 1088 recordings from 312 events. Most of the selected normal-faulting data come from Italy (76 %), and most of the strike-slip data come from Turkey (57 %) (figure II.10). In addition, more than two thirds of the data are Turkish, and most of these were recorded in the far field. Conversely, most of the Italian data lie in the near field (figure II.6). The selection parameters, which are also the explanatory variables of the neural model, are the moment magnitude (Mw), the Joyner-Boore distance (RJB), the average shear-wave velocity over the top thirty meters (Vs30), the focal depth (Depth), and the focal mechanism (FM).
RJB is the shortest horizontal distance between the recording site and the vertical projection of the rupture onto the ground surface, as shown in figure II.7. In Derras et al. (2014) we attempted to develop a GMPE using the mixed-effects model. The next section presents this procedure using the RESORCE database.
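
The selection criteria described above can be sketched as a simple record filter. This is a minimal illustration only: the `Record` class and its field names are hypothetical and do not correspond to actual RESORCE column names, and a missing value is assumed to be represented by `None`.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_FOCAL_DEPTH_KM = 25.0      # crustal earthquakes only
MIN_MOMENT_MAGNITUDE = 3.5     # felt earthquakes only


@dataclass
class Record:
    """One recording; field names are hypothetical, not RESORCE columns."""
    mw: float
    depth_km: float
    rjb_km: Optional[float]
    vs30_measured: Optional[float]
    focal_mechanism: Optional[str]


def is_selected(rec: Record) -> bool:
    """Return True if the record satisfies all selection criteria."""
    return (
        rec.depth_km <= MAX_FOCAL_DEPTH_KM
        and rec.mw >= MIN_MOMENT_MAGNITUDE
        and rec.focal_mechanism is not None   # source information
        and rec.rjb_km is not None            # propagation information
        and rec.vs30_measured is not None     # site information
    )


def select_records(records: List[Record]) -> List[Record]:
    """Keep only the records that pass every criterion."""
    return [rec for rec in records if is_selected(rec)]
```

Applied to the full database, a filter of this kind would be expected to reproduce the screening that leaves 1088 recordings, provided the missing-value conventions match those of the actual data files.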

The ANN model

Design

The design of the ANN model requires several choices: the input parameters that are relevant for the outputs, the number of hidden layers and the number of neurons in each, and the functional form of the activation functions. We first chose the independent parameters of our predictive models (the inputs of the neural model). Following classical seismological studies, we considered that ground motions depend on magnitude and a distance metric. The hypocentral depth and style of faulting were added in order to test their impact on ground-motion properties. The style-of-faulting parameter is divided into three classes: normal faulting, reverse faulting and strike-slip faulting. Site effects are classically taken into account through the average shear-wave velocity in the top 30 meters (Vs30). Despite the limitations of this proxy outlined by various authors (e.g., Mucciarelli and Gallipoli 2006; Castellaro et al. 2008; Assimaki et al. 2008; Kokusho and Sato 2008; Lee and Trifunac 2010; Cadet et al. 2012), it is presently the only one available for the RESORCE database. The input layer thus contains five neurons, one for each input parameter (log10(RJB), Mw, log10(Vs30), Depth and style-of-faulting class). The outputs of the ANN are, very classically, log10(PGV), log10(PGA), and log10(PSA) at 62 period values from 0.01 to 4 sec: the output layer thus contains 64 neurons. We did not include any other parameter, such as PGD or severity indices such as CAV or Arias intensity, in order to be consistent with the other papers of this special volume. In between, a single hidden layer was finally selected. Two or more hidden layers would have significantly increased the complexity of the model and the number of degrees of freedom, raising the issue of "over-determination", while the total σ would have shown only a marginal decrease.
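
As an illustration of the input layer described above, the following minimal sketch assembles the five-component input vector from raw metadata. The function name and the integer codes for the style-of-faulting classes are assumptions made for this example; the numeric coding actually used in the study is not given in the text, nor is the treatment of records with RJB equal to zero.

```python
import math
from typing import List

# Hypothetical numeric codes for the three style-of-faulting classes.
FM_CODES = {"normal": 1.0, "reverse": 2.0, "strike-slip": 3.0}

# Output layer size: log10(PGV), log10(PGA) and log10(PSA) at 62 periods.
N_OUTPUTS = 2 + 62


def build_input_vector(rjb_km: float, mw: float, vs30_m_s: float,
                       depth_km: float, fm_class: str) -> List[float]:
    """Return [log10(RJB), Mw, log10(Vs30), Depth, FM code]."""
    if rjb_km <= 0.0 or vs30_m_s <= 0.0:
        # log10 is undefined here; how RJB = 0 is handled is not specified
        # in the text, so this sketch simply rejects such values.
        raise ValueError("RJB and Vs30 must be strictly positive")
    if fm_class not in FM_CODES:
        raise ValueError(f"unknown style-of-faulting class: {fm_class!r}")
    return [
        math.log10(rjb_km),
        mw,
        math.log10(vs30_m_s),
        depth_km,
        FM_CODES[fm_class],
    ]
```

Only the distance and Vs30 are log-transformed, following the list of inputs given above; magnitude and depth enter linearly.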
In addition, an ANN model with a single hidden layer has been shown to be a universal approximator of a continuous function representing the physical phenomenon (Maass, 1997; Auer et al. 2008). This single hidden layer consists of five hidden neurons, which is the optimal number (Derras et al. 2012) for optimizing both the total σ and the Akaike Information Criterion (AIC). The final ANN model was selected after several tests. In particular, several activation functions (between input and hidden layers, and between hidden and output layers) were considered, as listed in Table.II.2. The lowest σ value, and the lowest number of iterations as well, correspond to a hyperbolic tangent function for the former and a linear one for the latter; this combination was kept for the final model and for the other sensitivity studies. The effectiveness of an ANN model in simulating highly nonlinear problems is attributed to this nonlinear activation function.

Table.II.2 Influence of different activation functions on σ and on kk, the number of iterations that gives the maximum likelihood in the random-effects algorithm. Columns: activation function of the hidden layer; activation function of the output layer; kk; total sigma (σ).

The "random-effects" algorithm was actually found to provide the fastest convergence and the lowest σ values. The way to implement these input parameters was chosen after several tests. The evolution of the standard deviations σ obtained with different combinations of the input parameters is displayed in Table.II.3. This table shows that the simultaneous use of the five parameters leads to the lowest σ, but that some parameters contribute more than others to this reduction.
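
To make the trade-off between fit and model complexity concrete, the sketch below counts the free parameters of a single-hidden-layer network and evaluates one common least-squares form of the AIC. The exact AIC expression used in the study is not given in the text; the form `n * ln(RSS / n) + 2k` (valid for Gaussian residuals, up to an additive constant) is an assumption, as are the function names.

```python
import math


def count_parameters(n_inputs: int, n_hidden: int, n_outputs: int) -> int:
    """Number of weights and biases in a one-hidden-layer network."""
    weights = n_inputs * n_hidden + n_hidden * n_outputs
    biases = n_hidden + n_outputs
    return weights + biases


def aic_least_squares(rss: float, n_samples: int, n_params: int) -> float:
    """AIC for Gaussian residuals, up to a constant: n*ln(RSS/n) + 2k."""
    if rss <= 0.0 or n_samples <= 0:
        raise ValueError("rss and n_samples must be positive")
    return n_samples * math.log(rss / n_samples) + 2 * n_params
```

For the architecture described here (5 inputs, 5 hidden neurons, 64 outputs), `count_parameters(5, 5, 64)` gives 414 free parameters. Each additional hidden neuron adds 70 more, which a criterion such as the AIC penalizes unless the residual sum of squares drops enough to compensate.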
Despite the relatively limited reduction in σ obtained by including the FM and depth parameters, they were retained in the present study in order to allow comparison with the results of classical GMPEs. Five parameters, namely log10(RJB), Mw, log10(Vs30), Depth and FM, are thus used as inputs to the neural model. All the tests and the final implementation were performed with the Matlab® Neural Network Toolbox™ (Demuth et al. 2009). According to the traditional approach in the derivation of GMPEs, the whole data set was used to constrain the model, which means that the number of training samples is the total number of available recordings, i.e. 1088.

Table.II.3 Evolution of the overall fit of the ANN model (as measured with total σ) for PGA, PGV, PSA at 0.2 sec and PSA at 2 sec, according to the different sets of input parameters.

ANN model formulations

The PSA ANN model developed in this study can be implemented in simple analytical tools, providing the median log10 of the ground-motion parameters (PGV, PGA and PSA [0.01 to 4 sec]) through a closed-form equation in which: The {P} vector is the input parameter vector (5 values). The {Pmin}, {Pmax}, {Tmin}, {Tmax} vectors are used to normalize the input and output parameters within their actual range. Their values are listed in Table.II.4 and Table.II.5, respectively. The [Wl] matrices represent the synaptic weights, while the {bl} vectors represent the biases (l = 1, 2). [W1] groups the synaptic weights between the input parameters and the hidden layer, while [W2] contains the synaptic weights between the hidden layer and the output layer. {b1} and {b2} are the bias vectors of the hidden and output layers, respectively. Their values are listed in Table.II.6. The Tanh dependency results from the selection of the Tanh-sigmoid activation function for the hidden layer.
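
The quantities defined above are enough to sketch the forward pass of such a model. The example below is a minimal illustration under one stated assumption: inputs and outputs are scaled linearly between their minimum and maximum values onto the interval [-1, 1], which is the default min-max mapping in the Matlab toolbox but is not stated explicitly in the text. The function names are hypothetical, and the weights, biases and bounds must be taken from the tables cited above.

```python
import math
from typing import List, Sequence


def normalize(values, v_min, v_max) -> List[float]:
    """Map each value linearly from [v_min, v_max] to [-1, 1] (assumed)."""
    return [2.0 * (v - lo) / (hi - lo) - 1.0
            for v, lo, hi in zip(values, v_min, v_max)]


def denormalize(values, t_min, t_max) -> List[float]:
    """Inverse mapping from [-1, 1] back to [t_min, t_max]."""
    return [lo + 0.5 * (v + 1.0) * (hi - lo)
            for v, lo, hi in zip(values, t_min, t_max)]


def matvec(matrix: Sequence[Sequence[float]], vector) -> List[float]:
    """Plain matrix-vector product, one output per matrix row."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def ann_predict(p, p_min, p_max, t_min, t_max, w1, b1, w2, b2) -> List[float]:
    """Median log10 ground-motion values for one input vector {P}."""
    x = normalize(p, p_min, p_max)
    # Hidden layer: tanh activation applied to [W1]{x} + {b1}.
    hidden = [math.tanh(a + b) for a, b in zip(matvec(w1, x), b1)]
    # Output layer: linear activation, [W2]{h} + {b2}.
    y = [a + b for a, b in zip(matvec(w2, hidden), b2)]
    return denormalize(y, t_min, t_max)
```

With the dimensions given in this chapter, `w1` would be a 5 x 5 matrix, `b1` a vector of 5 values, `w2` a 64 x 5 matrix, and `b2`, `t_min` and `t_max` vectors of 64 values.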
