
Examining Observed Cumulative Counts

Before you build any model, examine the data. Figure 4-1 is a cumulative percentage plot of the ratings, with separate curves for respondents whose households experienced crime and those whose households didn't. The curve for those who experienced crime lies above the curve for those who didn't. Figure 4-1 also helps you visualize the ordinal regression model, which models a function of these two cumulative curves.

Consider the rating Poor. A larger percentage of crime victims than nonvictims chose this response. (Because Poor is the first response, its cumulative percentage is just its observed percentage.) As additional percentages are added (the cumulative percentage for Only fair is the sum of the percentages for Poor and Only fair), the cumulative percentages for crime-victim households remain larger than those for households without crime. Only at the last category, where both groups must reach 100%, do the curves meet. Because victims assign lower scores, you expect a negative coefficient for the predictor variable hhcrime (household crime experience): the model subtracts the predictor term from each threshold, so a negative coefficient increases the cumulative probabilities of the lower ratings.
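Cumulative percentages like those plotted in Figure 4-1 are running sums of the category percentages within each group. The sketch below assumes made-up counts for the four ratings (scores 1 to 4, lowest first) in each group; they are illustrative only and are not the vermontcrime.sav data.

```python
from itertools import accumulate


def cumulative_percentages(counts):
    """Running cumulative percentages for ordered category counts, lowest category first."""
    total = sum(counts)
    return [100.0 * running / total for running in accumulate(counts)]


# Hypothetical counts for scores 1-4 (illustrative, not the survey data).
victim_counts = [15, 40, 40, 5]
nonvictim_counts = [8, 34, 51, 7]

victims = cumulative_percentages(victim_counts)
nonvictims = cumulative_percentages(nonvictim_counts)

for score, (v, n) in enumerate(zip(victims, nonvictims), start=1):
    print(f"score <= {score}: crime {v:5.1f}%   no crime {n:5.1f}%")

# The victims' curve lies on or above the nonvictims' curve, and both end at 100%.
print(all(v >= n for v, n in zip(victims, nonvictims)))
```

With these invented counts the crime curve is higher at every cutoff and the two curves meet only at 100%, which is the pattern the text describes.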

Specifying the Analysis

To fit the cumulative logit model, open the file vermontcrime.sav and from the menus choose:

- Analyze
  - Regression
    - Ordinal…
      - Dependent: rating
      - Factors: hhcrime
      - Options…
        - Link: Logit
        - Display
          - Goodness of fit statistics
          - Summary statistics
          - Parameter estimates
          - Cell information
          - Test of Parallel Lines
        - Saved Variables
          - Estimated response probabilities

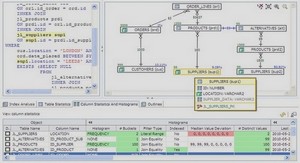

Parameter Estimates

Figure 4-2 contains the estimated coefficients for the model. The estimates labeled Threshold are the $\alpha_j$'s, the terms that play the role of intercepts. The estimates labeled Location are the ones you're interested in: the coefficients for the predictor variables. The coefficient for hhcrime (coded 1 = yes, 2 = no), the only independent variable in the model, is -0.633. As always with categorical predictors in models that include intercepts, the number of coefficients displayed is one less than the number of categories of the variable. Here the coefficient is for the value 1; category 2 is the reference category and has a coefficient of 0.

The coefficient for those whose households experienced crime in the past three years is negative, as you expected from Figure 4-1. That means it is associated with lower ratings of judges. If you calculate $e^{-\beta}$, you get the ratio of the odds of lower versus higher ratings for those who experienced crime to the same odds for those who did not. In this example, $e^{0.633} = 1.88$: at any cutoff, the odds of a rating at or below that cutoff are about 1.88 times as large for households that experienced crime. This ratio is the same for every cutoff of the rating scale.
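A quick numerical check of the constant odds ratio: with the cumulative logit written as $\alpha_j - \beta x$, the odds of a rating at or below cutoff $j$ are $e^{\alpha_j - \beta x}$, so the ratio between the two groups is $e^{-\beta}$ whatever the threshold. Because Figure 4-2 is not reproduced here, the threshold values below are inferred from the worked calculations later in this section; treat them as an assumption.

```python
import math

thresholds = [-2.392, -0.317, 2.593]  # alpha_1..alpha_3, inferred from the worked equations
beta_crime = -0.633                   # hhcrime = 1 (yes); hhcrime = 2 is the reference (0)


def cumulative_odds(alpha, beta, x):
    """Odds of a rating at or below the cutoff: exp(alpha - beta * x)."""
    return math.exp(alpha - beta * x)


ratios = []
for j, alpha in enumerate(thresholds, start=1):
    # x = 1 for crime households, x = 0 for the reference category.
    ratio = cumulative_odds(alpha, beta_crime, 1) / cumulative_odds(alpha, beta_crime, 0)
    ratios.append(ratio)
    print(f"cutoff {j}: odds ratio = {ratio:.2f}")

print(f"exp(-beta) = {math.exp(-beta_crime):.2f}")
```

Every cutoff gives the same ratio, which is the proportional-odds property the parallel-lines test later checks.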

The Wald statistic is the square of the ratio of the coefficient to its standard error. Based on its small observed significance level, you can reject the null hypothesis that the coefficient is zero. There appears to be a relationship between household crime and ratings of judges: at every cutoff of the rating scale, people whose households experienced crime are more likely than others to give the judges lower ratings.
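The Wald statistic and its observed significance level can be sketched as follows. The coefficient is the one reported for hhcrime, but the standard error is a hypothetical placeholder, because its value is not given in the text. For one degree of freedom, the chi-square tail probability equals the two-sided normal tail probability of coef/se, which the standard library's `math.erfc` provides.

```python
import math


def wald_test(coef, se):
    """Wald chi-square (1 df) and its observed significance level for one coefficient."""
    z = coef / se
    wald = z * z
    # For 1 df, P(chi-square > wald) = P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return wald, p_value


# Coefficient from the text; the standard error 0.2 is a made-up placeholder.
wald, p = wald_test(-0.633, 0.2)
print(f"Wald = {wald:.2f}, p = {p:.4f}")
```

Because the statistic is squared, the sign of the coefficient does not affect the test; only its size relative to the standard error does.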

Testing Parallel Lines

When you fit an ordinal regression, you assume that the relationships between the independent variables and the logits are the same for all the logits. That means that the results are a set of parallel lines or planes, one for each cumulative logit (that is, for each category of the outcome variable except the last). You can check this assumption by allowing the coefficients to vary, estimating them, and then testing whether they are all equal.

The result of the test of parallelism is in Figure 4-3. The row labeled Null Hypothesis contains the -2 log-likelihood for the constrained model, the model that assumes the lines are parallel. The row labeled General is for the model with separate lines or planes. You want to know whether the general model gives a sizable improvement in fit over the null hypothesis model.

The entry labeled Chi-Square is the difference between the two -2 log-likelihood values. If the lines or planes are parallel, the general model doesn't improve the fit very much, so the observed significance level for the change should be large; the parallel model is then adequate, and you don't reject the null hypothesis that the lines are parallel. From Figure 4-3, you see that the assumption is plausible for this problem. If you do reject the null hypothesis, it is possible that the link function selected is incorrect for the data or that the relationships between the independent variables and the logits are not the same for all logits.
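The parallel-lines test is a likelihood-ratio test, and the sketch below computes the chi-square as the drop in -2 log-likelihood together with its significance level. The -2 log-likelihood values are hypothetical, not those of Figure 4-3. The degrees of freedom equal the number of extra coefficients in the general model; with four ratings (three cumulative logits) and one predictor coefficient, that would be 3 - 1 = 2 here. To stay within the standard library, the tail probability uses the closed form that exists only for even degrees of freedom.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability, using the closed form valid for even df only."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form implemented only for positive even df")
    half = x / 2.0
    term = 1.0
    total = 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i!
        total += term
    return math.exp(-half) * total


def parallel_lines_test(neg2ll_null, neg2ll_general, df):
    """Chi-square = -2LL(parallel model) minus -2LL(general model), with its significance level."""
    chi_square = neg2ll_null - neg2ll_general
    return chi_square, chi2_sf_even_df(chi_square, df)


# Hypothetical -2 log-likelihood values, for illustration only.
chi_square, p = parallel_lines_test(neg2ll_null=100.0, neg2ll_general=98.5, df=2)
print(f"chi-square = {chi_square:.2f}, df = 2, p = {p:.3f}")
```

A large significance level, as in this made-up case, means the general model does not improve the fit enough to reject the hypothesis of parallel lines.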

A standard statistical maneuver for testing whether a model fits is to compare observed and expected values. That is what’s done here as well.

Calculating Expected Values

You can use the coefficients in Figure 4-2 to calculate cumulative predicted probabilities from the logistic model for each case:

$$\text{prob}(\text{event } j) = \frac{1}{1 + e^{-(\alpha_j - \beta x)}}$$
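The formula translates directly into code. In this minimal sketch, x is an indicator that is 1 for hhcrime = 1 (yes) and 0 for the reference category hhcrime = 2, matching the coding described for Figure 4-2; the function names are illustrative, not part of SPSS.

```python
import math


def cumulative_prob(alpha_j, beta, x):
    """Probability of a rating at or below category j: 1 / (1 + exp(-(alpha_j - beta * x)))."""
    return 1.0 / (1.0 + math.exp(-(alpha_j - beta * x)))


def crime_indicator(hhcrime):
    """1 for hhcrime = 1 (yes); 0 for hhcrime = 2 (no), the reference category."""
    return 1 if hhcrime == 1 else 0


# First threshold (inferred from the worked example in this section) and the hhcrime coefficient.
print(round(cumulative_prob(-2.392, -0.633, crime_indicator(1)), 4))  # 0.1469
```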

Remember that the events in an ordinal logistic model are not individual scores but cumulative scores. First, calculate the predicted probabilities for those who didn't experience household crime. Because hhcrime = 2 is the reference category, its coefficient is 0, so $\beta x$ drops out and all you have to worry about are the threshold terms $\alpha_j$.
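For the no-crime group, the calculation reduces to the logistic function of each threshold. The sketch below uses threshold values inferred from the worked equations for the crime group later in this section ($\alpha_1 = -2.392$, $\alpha_2 = -0.317$, $\alpha_3 = 2.593$), since Figure 4-2 itself is not reproduced here. With these rounded values the third cumulative probability comes out near 0.930; small differences in the fourth decimal from the values implied by the text reflect rounding of the published coefficients.

```python
import math

# Thresholds inferred from the worked calculations in this section (rounded to 3 decimals).
thresholds = [-2.392, -0.317, 2.593]

# hhcrime = 2 is the reference category, so beta * x = 0 and only the thresholds matter.
no_crime_cumulative = [1.0 / (1.0 + math.exp(-alpha)) for alpha in thresholds]

for j, p in enumerate(no_crime_cumulative, start=1):
    print(f"prob(score <= {j}) = {p:.4f}")
```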

From the estimated cumulative probabilities, you can easily calculate the estimated probabilities of the individual scores for those whose households did not experience crime. You calculate the probabilities for the individual scores by subtraction, using the formula:

prob(score = j) = prob(score less than or equal to j) – prob(score less than j).

The probability for score 1 doesn’t require any modifications. For the remaining scores, you calculate the differences between cumulative probabilities:

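$$
\begin{aligned}
\text{prob}(\text{score} = 2) &= \text{prob}(\text{score} = 1 \text{ or } 2) - \text{prob}(\text{score} = 1) = 0.3376 \\
\text{prob}(\text{score} = 3) &= \text{prob}(\text{score} = 1, 2, \text{ or } 3) - \text{prob}(\text{score} = 1 \text{ or } 2) = 0.5088 \\
\text{prob}(\text{score} = 4) &= 1 - \text{prob}(\text{score} = 1, 2, \text{ or } 3) = 0.0698
\end{aligned}
$$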
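The subtraction step can be written as a small helper that turns cumulative probabilities into category probabilities. The cumulative values used below (0.0838, 0.4214, 0.9302) are the ones implied by the no-crime category probabilities given above; for example, 0.0838 is what remains after subtracting 0.3376, 0.5088, and 0.0698 from 1.

```python
def category_probs(cumulative):
    """Convert cumulative probabilities for categories 1..J-1 into probabilities for 1..J.

    The cumulative probability for the last category J is always 1, so it is appended here.
    """
    full = list(cumulative) + [1.0]
    probs = [full[0]]  # prob(score = 1) needs no subtraction
    for j in range(1, len(full)):
        probs.append(full[j] - full[j - 1])  # prob(score <= j) - prob(score < j)
    return probs


no_crime = category_probs([0.0838, 0.4214, 0.9302])
print([round(p, 4) for p in no_crime])  # [0.0838, 0.3376, 0.5088, 0.0698]
```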

You calculate the probabilities for those whose households experienced crime in the same way. The only difference is that you have to include the value of β in the equation. That is:

$$
\begin{aligned}
\text{prob}(\text{score} = 1) &= \frac{1}{1 + e^{2.392 - 0.633}} = 0.1469 \\
\text{prob}(\text{score} = 1 \text{ or } 2) &= \frac{1}{1 + e^{0.317 - 0.633}} = 0.5783 \\
\text{prob}(\text{score} = 1, 2, \text{ or } 3) &= \frac{1}{1 + e^{-2.593 - 0.633}} = 0.9618 \\
\text{prob}(\text{score} = 1, 2, 3, \text{ or } 4) &= 1
\end{aligned}
$$
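The whole calculation for crime households, cumulative probabilities first and then differences, fits in a few lines. The thresholds are the values implied by the exponents in these equations ($\alpha_j$ enters as $-\alpha_j$, and $\beta = -0.633$ with $x = 1$); they are an inference, not a copy of Figure 4-2.

```python
import math

thresholds = [-2.392, -0.317, 2.593]  # inferred from the worked equations
beta = -0.633                         # coefficient for hhcrime = 1 (yes)
x = 1                                 # indicator: household experienced crime

# Cumulative probabilities: 1 / (1 + exp(-(alpha_j - beta * x))).
cumulative = [1.0 / (1.0 + math.exp(-(alpha - beta * x))) for alpha in thresholds]

# Individual score probabilities by subtraction; the last cumulative probability is 1.
full = cumulative + [1.0]
category = [full[0]] + [full[j] - full[j - 1] for j in range(1, len(full))]

print("cumulative:", [round(p, 4) for p in cumulative])
print("by score:  ", [round(p, 4) for p in category])
```

Compared with the no-crime group, probability shifts toward the lower scores, as the negative coefficient implies.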

Of course, you don’t have to do any of the actual calculations, since SPSS will do them for you. In the Options dialog box, you can ask that the predicted probabilities for each score be saved.

Figure 4-4 gives the predicted probabilities for each cell. The output is from the Means procedure with the saved predicted probabilities (EST1_1, EST2_1, EST3_1, and EST4_1) as the dependent variables and hhcrime as the factor variable. All cases with the same value of hhcrime have the same predicted probabilities for all of the response categories. That’s why the standard deviation in each cell is 0. For each rating, the estimated probabilities for everybody combined are the same as the observed marginals for the rating variable.
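Why the standard deviations are 0 can be seen by mimicking that Means summary. In this sketch, each hypothetical case gets estimated probabilities for scores 1 to 4 (standing in for the saved EST1_1 to EST4_1 variables), computed from the thresholds inferred earlier in this section; the list of cases is invented, and only the hhcrime values matter.

```python
import math
import statistics

thresholds = [-2.392, -0.317, 2.593]  # inferred from the worked equations in this section
beta = -0.633                         # coefficient for hhcrime = 1; hhcrime = 2 is the reference


def predicted_probs(hhcrime):
    """Estimated probabilities for scores 1-4 for one case, given its hhcrime value."""
    x = 1 if hhcrime == 1 else 0
    cum = [1.0 / (1.0 + math.exp(-(a - beta * x))) for a in thresholds] + [1.0]
    return [cum[0]] + [cum[j] - cum[j - 1] for j in range(1, len(cum))]


# Hypothetical cases, identified only by their hhcrime value.
cases = [1, 2, 2, 1, 2, 2, 1]

summary = {}
for group in (1, 2):
    rows = [predicted_probs(h) for h in cases if h == group]
    columns = list(zip(*rows))  # one tuple of case values per response category
    summary[group] = [(statistics.mean(col), statistics.stdev(col)) for col in columns]

for group, cell_stats in summary.items():
    print(group, [f"mean={m:.4f}, sd={s:.4f}" for m, s in cell_stats])
```

Every case in a group has identical predictions, so each cell's standard deviation is exactly 0 and its mean is that common value.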