With the ubiquity of Cloud Computing technologies over the last decade, Cloud providers and researchers have strived to design tools for evaluating and enhancing different Quality of Service (QoS) aspects of their systems, mainly performance, availability and reliability. Failing to comply with such requirements can compromise service availability and incur Service Level Agreement (SLA) violations, and hence impose penalties on Cloud providers. The development of system management policies that support QoS is therefore crucial. However, this is a challenging task, as it must rely on evaluation tools that are able to accurately represent the behavior of multiple attributes (e.g., CPU, RAM, network traffic) of Cloud systems. It is also complicated by the nature of Cloud systems, which are built on heterogeneous physical infrastructure and experience varying demand; these systems have different physical resources, network configurations and software stacks. Further, reproducing the conditions under which system management policies are evaluated, and controlling those conditions, are challenging tasks in themselves.

In this context, workload modeling facilitates performance evaluation and simulation of a system treated as a “black box”. Workload models allow Cloud providers to evaluate and simulate resource management policies aiming to enhance their system QoS before deploying them in full-scale production environments. For researchers, workload modeling provides a controlled input, allowing workloads to be adjusted to fit particular situations, evaluation conditions to be repeated and additional features to be included. Furthermore, workload simulation based on realistic scenarios enables the generation of tracelogs, which are scarce in Cloud environments because of business and confidentiality concerns. Workload modeling and workload generation are challenging, especially in Cloud systems, due to multiple factors: (i) workloads are composed of various tasks and events submitted at any time, (ii) heterogeneous hardware in a Cloud infrastructure impacts task execution time and arrival time and (iii) the virtualization layer of the Cloud infrastructure incurs overhead due to additional I/O processing and communications with the Virtual Machine Monitor (VMM). These factors make it difficult to design workload models and workload generators that fit different workload types and attributes. In the current state of the art, effort is instead devoted to designing specialized workload modeling techniques that focus mainly on specific user profiles, application types or workload attributes.

To tackle the above issues, we propose in this article a hybrid workload modeling and optimization approach to accurately estimate any attribute of workload, from any domain. The objective is to develop realistic CPU workload profiles for different virtualized telecom and IT systems, based on data obtained from real systems. Our proposition consists first of modeling workload data sets by using different Hull-White modeling processes, and then determining an optimal estimated workload solution based on a custom Genetic Algorithm (GA). We also propose a combination of a Kalman filter (Hu, Jiang & Wang, 2014) and support vector regression (SVR) (Cortes & Vapnik, 1995) to estimate workloads. Our IP Multimedia Subsystem (IMS) workload data sets include three variations of the same load profile, where CPU workload is generated by stressing a virtualized IMS environment with varying numbers of calls per second, thus producing sharp increases and decreases in CPU workload over long periods of time. Our IT workloads exhibit two different behaviors. The Google CPU workload under evaluation, for example, is composed of sharp spikes of CPU workload variations over short periods of time. By contrast, another CPU workload, namely Web App, is characterized by periodicity trends. These data sets therefore display unique trends and behavior that provide interesting scenarios to evaluate the efficiency of the workload modeling and workload generation techniques that we are proposing in this article. The evaluation of the mean absolute percentage error (MAPE) of the best estimated data provided by our Hull-White-based approach against the observed data shows significant improvement in the accuracy level compared to other workload modeling approaches such as standard SVR and SVR with a Kalman filter.
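As an illustration of the accuracy metric mentioned above, the following minimal sketch computes the MAPE between an observed and an estimated CPU workload series using the standard definition of the metric. The function name `mape` and the sample values are hypothetical and chosen for illustration only; they are not taken from the data sets or the implementation used in this article.

```python
def mape(observed, estimated):
    """Mean absolute percentage error between two series, in percent.

    Standard definition: 100/n * sum(|(observed_i - estimated_i) / observed_i|).
    """
    if len(observed) != len(estimated):
        raise ValueError("series must have the same length")
    if not observed:
        raise ValueError("series must not be empty")
    total = 0.0
    for obs, est in zip(observed, estimated):
        if obs == 0:
            # The percentage error is undefined when the observed value is zero.
            raise ValueError("observed values must be non-zero")
        total += abs((obs - est) / obs)
    return 100.0 * total / len(observed)


# Hypothetical CPU utilization samples (percent), for illustration only.
observed_cpu = [50.0, 80.0, 40.0]
estimated_cpu = [45.0, 84.0, 40.0]
print(mape(observed_cpu, estimated_cpu))  # approximately 5.0
```

A lower MAPE indicates that the estimated series follows the observed series more closely. Note that the metric is undefined for observed values of zero, which this sketch rejects explicitly.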

Related Work

In this section, we review existing works relevant to workload modeling to set the perspective for our contributions. The present review emphasizes, but is not limited to, virtualized Cloud environments such as Google real traces and Open IMS datasets. This overview aims to give a broader picture of the general types of workloads and workload modeling techniques that have been previously addressed by the research community.

Workload Domains

Workload domain characterization is the first step and the uppermost level that should be considered before planning performance evaluation. It has a major impact on which types of workload are to be considered. Domains vary in shape and scope, depending on the size and purpose of the environment; one can go from the performance evaluation of a single application on a workstation to a full-scale performance evaluation of a multi-tenant, heterogeneous cloud environment (e.g., Amazon EC2). Google clusters and virtualized IP Multimedia Subsystem (IMS) cloud environments, both subjects of this work, are considered workload domains belonging to the field of Cloud computing. Several relevant works address this context.

Moreno et al. (Moreno, Garraghan, Townend & Xu, 2013) provide a reusable approach for characterizing Cloud workloads through large-scale analysis of real-world Cloud data and trace logs from Google clusters. In this work, we address the same workload domain. Their approach differs from ours, however, starting with the dynamic behavior of their workload, in contrast with the static behavior of ours. The difference between static and dynamic workload behavior is discussed next. MapReduce and Hadoop performance optimization (Moreno et al., 2013), (Yang, Luan & Li, 2012), (An, Zhou, Liu & Geihs, 2016) also opens interesting avenues because of the nature of the data-intensive computing taking place in such environments, and because this framework is at the core of the datacenters of most leading technology companies, such as Google, Yahoo and Facebook. This type of optimization, on the other hand, focuses on long-term analysis of predictable workloads and scheduled tasks, rather than on quick, unpredictable bursts in demand for a specific application. Performance evaluation of web and cloud applications (An et al., 2016), (Bahga & Madisetti, 2011), (Magalhaes, Calheiros, Buyya & Gomes, 2015) is another popular domain worthy of consideration. This domain usually involves the evaluation of user behavior, among other things, which is less prevalent in other domains. However, this domain is typically application-centric and rarely considers the workload characteristics of the whole cloud environment.

Workload Types

The next step when planning performance evaluation is to define which workload types to investigate. Feitelson (Feitelson, 2014) gives a good description of what constitutes a workload type. For instance, the most basic unit of a workload is an instruction, many of which compose a process or a task. A set of processes or tasks can in turn be initiated by a job sent by a user, an application, the operating system, or a mix thereof.

In the field of cloud computing, the purpose of performance evaluation is mainly to optimize hardware resource utilization. There is therefore little to no variation in workload types, aside from minor differences in philosophy or context among researchers. Workloads typically originate from a mix of user, application and task workload types. For instance, the workloads studied by Magalhaes et al. (Magalhaes et al., 2015) and Bahga and Madisetti (Bahga & Madisetti, 2011) are spawned from the behavioral patterns of specific user profiles using select web applications. Moreno et al. (Moreno et al., 2013) use a similar approach but focus on the workload processed within a cloud datacenter driven by users and tasks. Studying these workload types can be useful in evaluating our own datasets, since the latter also depend on user behavior. In fact, user behavior is the prime factor in workload generation for our performance evaluations. Another approach, proposed by An et al. (An et al., 2016), consists of viewing the user, the application and the service workload types as three layers of granularity. The users launch a varying number of application-layer jobs, which in turn execute a varying number of service-layer tasks. This method is effective in the context of predictable, dynamic workloads. In some cases, only one workload type is investigated, such as parallel processes and jobs under a specific cloud environment (Yang et al., 2012). Such work is intended to acquire very specific benchmarks from selected applications like MapReduce, and to evaluate optimal hardware configuration parameters in a cluster.
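To make the layered view of workload types more concrete, the sketch below represents users, application-layer jobs and service-layer tasks as nested data structures and generates a small synthetic workload. It is only an illustration of the three layers of granularity described above, not the model of An et al.; the class names, the function names `generate_layered_workload` and `total_cpu_demand`, and the uniform distributions are all assumptions made for this example.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Task:
    cpu_seconds: float  # hypothetical CPU demand of one service-layer task


@dataclass
class Job:
    tasks: list = field(default_factory=list)  # service-layer tasks of this job


@dataclass
class User:
    jobs: list = field(default_factory=list)  # application-layer jobs of this user


def generate_layered_workload(n_users, max_jobs, max_tasks, seed=0):
    """Each user launches 1..max_jobs jobs; each job runs 1..max_tasks tasks."""
    rng = random.Random(seed)  # seeded so that the workload is reproducible
    users = []
    for _ in range(n_users):
        user = User()
        for _ in range(rng.randint(1, max_jobs)):
            job = Job()
            for _ in range(rng.randint(1, max_tasks)):
                # Assumed distribution: CPU demand uniform in [0.1, 5.0] seconds.
                job.tasks.append(Task(cpu_seconds=rng.uniform(0.1, 5.0)))
            user.jobs.append(job)
        users.append(user)
    return users


def total_cpu_demand(users):
    """Sum the CPU demand of every task across all users and jobs."""
    return sum(t.cpu_seconds for u in users for j in u.jobs for t in j.tasks)


workload = generate_layered_workload(n_users=3, max_jobs=4, max_tasks=5)
print(len(workload), "users,", sum(len(u.jobs) for u in workload), "jobs")
```

Seeding the generator reflects the need, noted in the introduction, to repeat evaluation conditions: the same seed always produces the same workload.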

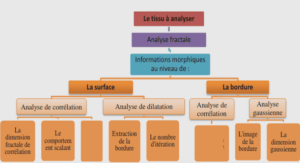

Workload Attributes

Defining workload attributes is the last step to consider when planning the performance evaluation of a system. Attributes are what characterize workload types, and they directly influence the hardware resources of the system. It is therefore essential to have a clear idea of how each instruction interacts with one or more resources of the system in order to correctly interpret the workload data. For instance, I/O attributes (disk and memory usage, network communications, etc.) include the distribution of I/O sizes, patterns of file access and the use of read and/or write operations (Feitelson, 2014).

In this work, only the scheduling of the CPU is of interest. Hence, the relevant attributes are each job’s arrival and running times (Feitelson, 2014). CPU scheduling is a common interest in performance evaluation, and most studies analyze many workload attributes related to this resource. In the work of An et al. (An et al., 2016), the system CPU rate, threads, Java Virtual Machine (JVM) memory usage and system memory usage are analyzed. Similar attributes are considered in the work of Magalhaes et al. (Magalhaes et al., 2015), where the system CPU rate, memory rate and the users’ transactions per second are evaluated. Other works, such as that of Moreno et al. (Moreno et al., 2013), are much more thorough in their analysis and subdivide attributes into user patterns (submission rate, CPU estimation, memory estimation) and task patterns (length, CPU utilization, memory utilization). Putting aside the differences in workload domains such as IMS and Google performance evaluation, we can safely assume that the system CPU behavior remains the same in both cloud environments.
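The relationship between job arrival times, running times and CPU load can be illustrated with the simplified sketch below. It assumes that every job starts as soon as it arrives and occupies exactly one core for its whole running time, with no queueing or scheduling overhead; these assumptions, as well as the function name `cpu_utilization` and the sample jobs, are hypothetical and serve only to illustrate how the two attributes shape a CPU utilization profile.

```python
def cpu_utilization(jobs, n_cores, horizon, step=1.0):
    """Return the CPU utilization (0.0 to 1.0) sampled every `step` time units.

    jobs    -- list of (arrival_time, running_time) pairs
    n_cores -- number of cores available in the system
    horizon -- length of the observation window, in the same time unit
    """
    n_slots = int(horizon / step)
    profile = []
    for i in range(n_slots):
        t = i * step
        # A job is active at time t if it has arrived and has not yet finished.
        running = sum(1 for arrival, run in jobs if arrival <= t < arrival + run)
        # Utilization is capped at 1.0 when more jobs than cores are active.
        profile.append(min(running / n_cores, 1.0))
    return profile


# Two hypothetical jobs on a two-core system, observed for four time units.
jobs = [(0.0, 2.0), (1.0, 2.0)]
print(cpu_utilization(jobs, n_cores=2, horizon=4.0))  # [0.5, 1.0, 0.5, 0.0]
```

In a real system, scheduling policy, contention and virtualization overhead would also affect the observed profile, so this sketch should be read as a first approximation only.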
