Discriminative Training of Continuous Models in Machine Translation

Statistical Machine Translation

This chapter describes several approaches used in Statistical Machine Translation (SMT), as well as the log-linear model into which continuous-space models (CSMs) will be incorporated. This discussion lays the foundation not only for the integration of CSMs but also for their training, which is described in the following chapters. The first section gives a brief overview of several widely used SMT systems, in particular the phrase-based and n-gram-based approaches. Some methods used to evaluate the quality of SMT outputs are presented in Section 1.2. The final section formulates and describes the discriminative training of log-linear coefficients, along with the corresponding optimization algorithms. Following (Cherry and Foster, 2012), it reviews several discriminative objective functions used in these methods (Section 1.3.1), which lay the groundwork for further developments.

Overview on Statistical Machine Translation

Translation from a source sentence s to a target sentence t is a complex process, usually modelled by dividing the whole derivation into smaller sub-processes, such as the segmentation of the source text s into smaller fragments, the translation of each individual fragment into target words, and their final recombination. Each sub-process can be modelled independently and learnt through the statistical analysis of large corpora, while the interaction between the different models is also learnt.

Early work on SMT considers translation as a generative process. The modelling aims at estimating the probability of the target sentence given its corresponding source, and involves probability distributions at the word level. The first mathematically sound approach to modelling the translation process is fully described in (Brown et al., 1993), which considers translation as a search for the most likely target sentence t*:

$$t^* = \operatorname*{argmax}_{t} \; p(t \mid s)$$

The solution of (Brown et al., 1993), known in the literature as the noisy channel model (or source channel model), is to apply Bayes' rule, noting that p(s) does not depend on t, to obtain the following decomposition:

$$t^* = \operatorname*{argmax}_{t} \; p(t \mid s) = \operatorname*{argmax}_{t} \; p(s, t) = \operatorname*{argmax}_{t} \; p(s \mid t) \times p(t) \qquad (1.1)$$

This formulation divides the generation of the pair (s, t) into two terms: the choice of a target sentence t according to a language model (probability p(t)), and the fit of this sentence to the source text s according to a transmission channel modelled by p(s|t), also referred to as the translation model. It thus divides the modelling into two subtasks that can be addressed independently in terms of estimation techniques and training data: language modelling and translation modelling. The translation model is estimated on a set of sentence pairs (parallel training data), each of which contains a sentence in the source language and its translation in the target language.
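To make the decision rule (1.1) concrete, the following minimal Python sketch scores a handful of candidate translations of one source sentence by p(s|t) × p(t). The tables `channel_model` and `language_model` and their probabilities are hypothetical toy values, and the candidates are simply enumerated; a real system would obtain them from a search procedure. Scores are combined in log space, which avoids numerical underflow on long sentences.

```python
import math

# Hypothetical toy models for the fixed source sentence s = "la maison".
channel_model = {   # translation model p(s | t)
    "the house": 0.4,
    "house the": 0.4,
    "the home": 0.2,
}
language_model = {  # language model p(t)
    "the house": 0.05,
    "house the": 0.001,
    "the home": 0.03,
}

def noisy_channel_decode(candidates):
    """Return argmax_t p(s|t) * p(t) (Eq. 1.1), computed as a sum of logs."""
    return max(candidates,
               key=lambda t: math.log(channel_model[t]) + math.log(language_model[t]))

best = noisy_channel_decode(list(channel_model))
print(best)
```

In this toy example the translation model cannot distinguish "the house" from "house the"; it is the language model term p(t) that favours the fluent word order.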
However, parallel texts only expose surface, sentence-level relationships, without any explicit indication of the decisions taken to derive the target sentence from the source. Learning the hidden relationships inside a sentence pair only becomes possible with the introduction of latent variables a describing the alignment between the components of the source and target sentences. The definition of these latent variables characterizes the different approaches to Machine Translation; among them, the phrase-based approach (Section 1.1.2), which models translation in terms of phrases instead of words, is today one of the state-of-the-art approaches.

The noisy channel model, however, presents some limitations. First, the decision rule (1.1) can be shown to be optimal only when the true probability distributions are used. In practice, distributions are estimated from training data under simplifying assumptions. These assumptions make the models only poor approximations of the underlying data distribution, yet they are necessary to make statistical estimation tractable. Second, the noisy channel model makes it difficult to integrate complex forms of latent variables, as well as different approaches to the same model.

Since the work of (Och and Ney, 2002), the discriminative framework based on a log-linear model has often been used instead. This framework encodes existing knowledge about the translation process (such as the likelihood of a target sentence or of a translation decision) in the form of feature functions. In the general case, the probability p(t, a|s) is defined as:

$$p(t, a \mid s) = \frac{1}{H(s)} \exp\left(\sum_{m=1}^{M} \lambda_m f_m(s, t, a)\right) \qquad (1.2)$$

which involves a set of M feature functions f_m reflecting different aspects of the translation decision, each weighted by a coefficient λ_m. Here H(s) is the normalization constant, obtained by summing the exponential term over all pairs (t, a), which ensures that all probabilities sum to 1.
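Equation (1.2) can be illustrated on a finite list of candidate pairs (t, a). In the following sketch, which is only an approximation, H(s) is computed over the listed candidates rather than over every possible (t, a), and the derivation labels "d1", "d2", "d3" and the feature values are hypothetical. The two features are log p(s|t) and log p(t), as in the noisy channel example; the maximum score is subtracted before exponentiating so that large feature values do not overflow.

```python
import math

def log_linear_probs(candidates, weights):
    """Eq. (1.2) restricted to a finite candidate set.

    candidates maps a (t, a) pair to its feature vector [f_1, ..., f_M];
    weights is the vector of log-linear coefficients [lambda_1, ..., lambda_M].
    Assumption: H(s) is summed over the given candidates only.
    """
    scores = {ta: sum(lam * f for lam, f in zip(weights, feats))
              for ta, feats in candidates.items()}
    top = max(scores.values())  # subtracted before exp() for numerical stability
    unnorm = {ta: math.exp(sc - top) for ta, sc in scores.items()}
    h = sum(unnorm.values())    # equals H(s) * exp(-top); the ratios are unchanged
    return {ta: u / h for ta, u in unnorm.items()}

# Hypothetical candidates with features f_1 = log p(s|t) and f_2 = log p(t).
candidates = {
    ("the house", "d1"): [math.log(0.4), math.log(0.05)],
    ("house the", "d2"): [math.log(0.4), math.log(0.001)],
    ("the home", "d3"): [math.log(0.2), math.log(0.03)],
}
probs = log_linear_probs(candidates, [1.0, 1.0])
for (t, a), p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{t:10s} {a}  {p:.3f}")
```

With both weights set to 1, the resulting distribution is simply p(s|t) p(t) renormalized over the three candidates; other weight vectors reshape it.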
The SMT system then selects the target sentence t (and a derivation a) that maximizes the probability (1.2);¹ since H(s) does not depend on t or a, it can be dropped from the maximization:

$$t^* = \operatorname*{argmax}_{t, a} \sum_{m=1}^{M} \lambda_m f_m(s, t, a) \qquad (1.3)$$

It is important to note that the noisy channel model (1.1) is a special case of this discriminative framework, in which the set of features contains two functions: the first one, f_1 = log p(s|t), reflects the compatibility between s and t, while the second one, f_2 = log p(t), evaluates the fluency of t in the target language; these two are equally weighted with λ_1 = λ_2 = 1. In general, the feature functions may exploit various characteristics of the triplet (s, t, a) at different levels (word, phrase, or sentence), and are derived from the representation of the latent variables (or derivation) a. In practice, the definition of the latent variables and the set of feature functions are the two properties that characterize the different approaches to Statistical Machine Translation.

Once the model is defined, its parameters are estimated on training corpora, traditionally through a two-step process. In the first step, several probabilistic models, which provide the values of the feature functions f_1^M, are estimated independently on very large monolingual or bilingual corpora. The second step learns the weights of these models in the linear combination (1.3), namely the log-linear coefficients λ. Introducing these parameters adds flexibility, but also complexity. The optimization of λ constitutes the tuning task in building an SMT system. While the training of a standard maximum entropy model consists of maximizing the conditional likelihood, the training of log-linear coefficients in an SMT system often incorporates automatic evaluation metrics for MT (the most widely used being BLEU, see Section 2.5.2). A typical example is Minimum Error Rate Training (MERT) (Och, 2003).
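The two claims above, that H(s) can be ignored when searching for the best translation and that the noisy channel model is the log-linear model with features log p(s|t), log p(t) and unit weights, can be checked on a toy example. The probabilities below are hypothetical, and each candidate t is assumed to have a single derivation a so that derivations can be left implicit.

```python
import math

# Hypothetical toy scores for one source sentence s.
p_s_given_t = {"the house": 0.3, "the home": 0.6}  # translation model p(s|t)
p_t = {"the house": 0.05, "the home": 0.02}         # language model p(t)

def features(t):
    """Two feature functions: f_1 = log p(s|t) and f_2 = log p(t)."""
    return [math.log(p_s_given_t[t]), math.log(p_t[t])]

def decode(candidates, weights):
    """Eq. (1.3): argmax of sum_m lambda_m * f_m; H(s) is never computed."""
    return max(candidates,
               key=lambda t: sum(lam * f for lam, f in zip(weights, features(t))))

def noisy_channel(candidates):
    """Eq. (1.1): argmax_t p(s|t) * p(t)."""
    return max(candidates, key=lambda t: p_s_given_t[t] * p_t[t])

cands = list(p_s_given_t)
print(decode(cands, [1.0, 1.0]), "|", noisy_channel(cands))
print(decode(cands, [1.0, 0.5]))
```

With λ = (1, 1) both rules select "the house"; lowering the language model weight to 0.5 flips the decision to "the home". This sensitivity of the output to λ is what the tuning step exploits.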

Word-based approach

The word-based approach was introduced in (Brown et al., 1993; Vogel et al., 1996; Tillmann et al., 1997), which propose to estimate the translation model (TM) p(s|t) of the noisy channel model by decomposing the translation at the word level and by introducing word-to-word alignments as latent variables:

$$p(s \mid t) = \sum_{a} p(s, a \mid t) = \sum_{a} p(a \mid t) \times p(s \mid a, t) \qquad (1.4)$$

where a = a_1, …, a_J denotes the word alignment variable. Each element a_j, for 1 ≤ j ≤ J, takes its value in {1, …, I}, where J and I denote the number of words in the source and target sentences, respectively. The first term p(a|t) is referred to as the distortion model, which characterizes the syntactic reordering of the source sentence, while p(s|a, t) is a translation model given this reordering. The latter defines the generative probability of s, which can be obtained from word-level probabilities conditioned on the aligned target words (lexical translation model), while the alignment a can be made independent of t, as proposed in (Vogel et al., 1996):

$$p(s, a \mid t) = p(a \mid t) \times p(s \mid a, t) = \left(\prod_{j=1}^{J} p(a_j \mid a_{j-1})\right) \left(\prod_{j=1}^{J} p(s_j \mid t_{a_j})\right) \qquad (1.5)$$

Other models make different assumptions to keep the inference of the latent variables a tractable. For example, IBM Models 3 and 4 estimate the distortion model p(a|t) by introducing fertility, which characterizes the number of source words a target word can be aligned to. However, a common weakness of these models is the asymmetry of the alignment: a source word is aligned to only one target word, whereas a target word may be aligned to multiple source words (many-to-one alignment). Moreover, most of them estimate p(s|a, t) by simplifying it to a lexical translation model, which is a consequence of the many-to-one alignment: each source word s_j is generated depending only on the target word t_{a_j} it is aligned to. The model hence fails to capture information from target words other than the aligned one, or from a whole group of target words.

¹ Theoretically, t must be chosen to maximize its probability given s, which is estimated by marginalizing over the variable a in Equation (1.2). In practice, as this marginalization is intractable, we instead look for a pair (t, a) that maximizes the probability, and take the corresponding t as the output translation.
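The following sketch evaluates the HMM-style decomposition (1.5) for a given alignment and computes the marginal (1.4) in two ways: by brute-force enumeration of all I^J alignments, and with the forward algorithm, which exploits the first-order dependency p(a_j | a_{j-1}). The distortion model `jump` (uniform start, exponential decay away from the monotone position a_{j-1} + 1) and the lexical table `lex` are hypothetical choices made for illustration, not the parameterization of (Vogel et al., 1996). Positions are 0-based in the code.

```python
import itertools
import math

def jump(prev, a, I):
    """Hypothetical distortion model p(a_j | a_{j-1}) over target positions 0..I-1.

    The first alignment (prev is None) is uniform; afterwards, mass decays
    exponentially with the distance from the monotone choice prev + 1.
    """
    if prev is None:
        return 1.0 / I
    weights = [math.exp(-abs(i - prev - 1)) for i in range(I)]
    return weights[a] / sum(weights)

def hmm_joint(src, tgt, align, lex):
    """Eq. (1.5): prod_j p(a_j | a_{j-1}) * prod_j p(s_j | t_{a_j})."""
    prob, prev = 1.0, None
    for j, a_j in enumerate(align):
        prob *= jump(prev, a_j, len(tgt)) * lex.get((src[j], tgt[a_j]), 0.0)
        prev = a_j
    return prob

def marginal_brute_force(src, tgt, lex):
    """Eq. (1.4): sum over all I**J alignments (exponential; tiny inputs only)."""
    return sum(hmm_joint(src, tgt, a, lex)
               for a in itertools.product(range(len(tgt)), repeat=len(src)))

def marginal_forward(src, tgt, lex):
    """Same sum with the forward algorithm, in O(J * I^2) time."""
    I = len(tgt)
    alpha = [jump(None, i, I) * lex.get((src[0], tgt[i]), 0.0) for i in range(I)]
    for j in range(1, len(src)):
        alpha = [sum(alpha[k] * jump(k, i, I) for k in range(I))
                 * lex.get((src[j], tgt[i]), 0.0)
                 for i in range(I)]
    return sum(alpha)

src, tgt = ["la", "maison"], ["the", "house"]
lex = {("la", "the"): 0.7, ("la", "house"): 0.1,   # hypothetical p(s | t)
       ("maison", "house"): 0.8, ("maison", "the"): 0.1}
best = max(itertools.product(range(len(tgt)), repeat=len(src)),
           key=lambda a: hmm_joint(src, tgt, a, lex))
print(best, marginal_brute_force(src, tgt, lex), marginal_forward(src, tgt, lex))
```

Both marginals agree, but only the forward computation remains feasible for realistic sentence lengths; the most probable alignment here is (0, 1), i.e. la→the and maison→house.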

Phrase-based approach

The word-based approach has many shortcomings, such as failing to capture dependencies outside the word alignment links and to model complex reordering patterns. Moreover, longer dependencies are learnt only by the target language model, which ignores the source text. The phrase-based approach aims at incorporating longer contexts into the translation model by learning translations of phrases.² The source sentence is first segmented into phrases, then each phrase is translated independently, and the translated phrases are recombined to form the target sentence. Compared to word-based models, however, this kind of model introduces an additional level of complexity, namely the phrase segmentation, after which an optimal alignment is searched over phrases instead of words. To simplify the problem, the phrase segmentation is often performed during training using heuristic techniques.

The shift from word-based to phrase-based models was introduced in (Och et al., 1999) with the alignment template method. This approach introduces latent phrase alignment variables directly derived from the word alignments, which are constructed using a two-phase process: first, word-level alignments are built, then phrase-level alignments are derived from them using a heuristic. To overcome the many-to-one mapping implied by word alignment models, the heuristic, often referred to as symmetrization, builds two sets of word alignments, one in each direction (source-to-target and target-to-source). The procedure then merges them into a symmetric alignment matrix that contains many-to-many alignments. Finally, for each sentence pair, all phrase pairs that are consistent with the alignment matrix are extracted, that is, phrase pairs containing at least one alignment link and whose words are not aligned to any word outside the pair. As in the word-based approach, the authors also define several generative models derived from aligned source and target phrases, which correspond to a set of feature functions in the discriminative framework.
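The symmetrization and extraction steps described above can be sketched as follows. The union of the two directional alignments is used as the merging heuristic because it is the simplest choice; the heuristics used in practice are more refined. The extraction enumerates source spans, takes the smallest target span covering their links, and keeps the pair only if it passes the consistency test. As a further simplification, spans are not extended over unaligned boundary words, and the maximum phrase length `max_len` is a hypothetical parameter.

```python
def symmetrize_union(src_to_tgt, tgt_to_src):
    """Merge two directional alignments into one many-to-many set of (i, j) links.

    src_to_tgt holds (i, j) links (source i, target j) from one direction;
    tgt_to_src holds (j, i) links from the other direction.
    """
    return set(src_to_tgt) | {(i, j) for (j, i) in tgt_to_src}

def extract_phrases(src, tgt, links, max_len=3):
    """Return all phrase pairs consistent with the alignment `links`."""
    pairs = set()
    for i1 in range(len(src)):
        for i2 in range(i1, min(i1 + max_len, len(src))):
            # Target positions linked to the source span [i1, i2].
            js = [j for (i, j) in links if i1 <= i <= i2]
            if not js:
                continue  # a consistent pair needs at least one link
            j1, j2 = min(js), max(js)
            if j2 - j1 >= max_len:
                continue
            # Reject if a target word in [j1, j2] is linked outside [i1, i2].
            if any(j1 <= j <= j2 and not i1 <= i <= i2 for (i, j) in links):
                continue
            pairs.add((" ".join(src[i1:i2 + 1]), " ".join(tgt[j1:j2 + 1])))
    return pairs

src = ["la", "maison", "bleue"]
tgt = ["the", "blue", "house"]
links = symmetrize_union({(0, 0), (1, 2)}, {(1, 2), (2, 1)})
for pair in sorted(extract_phrases(src, tgt, links)):
    print(pair)
```

Note that "la maison" is not extracted: its covering target span "the blue house" contains "blue", which is aligned to "bleue" outside the source span.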
During training, the features of the alignment template approach take into account both the probability of a phrase pair and the probabilities of the lexical alignment links inside each phrase pair. The latter terms were later generalized under the name lexical weights in (Koehn et al., 2003). The alignment template method (Och, 1999) considers bilingual word classes instead of surface forms, as a first attempt to address data sparsity issues. Zens et al. (2002) introduce for the first time the term phrase-based machine translation, which denotes a simplification of the alignment template approach in which a distribution over bilingual phrases is estimated directly from their surface forms. At the sentence level, a set of latent variables is introduced to describe the segmentation of a sentence pair into bilingual phrases. The authors also propose to deal with the reordering problem through a reordering graph generated by a word-based model. The model described in (Koehn et al., 2003) is very similar, but introduces a simpler distance-based reordering model. These two papers define the standard method for building a translation model from a parallel bilingual corpus. Some proposals in the literature aim at directly addressing the joint segmentation and alignment into phrase pairs; however, they are often based on complex models that require approximate inference procedures, or impose constraints to restrict the combinatorial search space. Further details can be found in (Marcu and Wong, 2002; DeNero et al., 2006, 2008; Zhang et al., 2008; Andrés-Ferrer and Juan, 2009; Bansal et al., 2011; Feng and Cohn, 2013). However, these models never significantly outperform the standard methods described in (Zens et al., 2002; Koehn et al., 2003).

² A phrase is simply defined as a sequence of words whose length is not fixed beforehand; it should not be understood here in its usual linguistic sense.
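A distance-based reordering penalty of the kind mentioned for (Koehn et al., 2003) can be sketched as follows: each translated phrase is charged the distance between the start of its source span and the position just after the end of the previously translated source span, so a monotone translation costs nothing. The exponential form α^d and the value of `alpha` are illustrative assumptions; in a log-linear system the distance itself is typically used as one feature function f_m, with its weight tuned like any other λ_m.

```python
import math

def distortion_distance(source_spans):
    """Total distance-based reordering cost of a phrase segmentation.

    source_spans lists, in target order, the (start, end) source positions
    (0-based, inclusive) covered by each translated phrase. Phrase i costs
    |start_i - end_{i-1} - 1|, with end_0 = -1, so a monotone order costs 0.
    """
    total, prev_end = 0, -1
    for start, end in source_spans:
        total += abs(start - prev_end - 1)
        prev_end = end
    return total

def distortion_log_prob(source_spans, alpha=0.5):
    """log(alpha ** d): an exponential penalty; alpha is a hypothetical value."""
    return distortion_distance(source_spans) * math.log(alpha)

print(distortion_distance([(0, 0), (1, 1), (2, 2)]))  # monotone
print(distortion_distance([(0, 0), (2, 2), (1, 1)]))  # "la maison bleue" -> "the blue house"
```

For the swapped order, the second phrase jumps one position forward (cost 1) and the third jumps back over it (cost 2).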

The n-gram approach in Machine

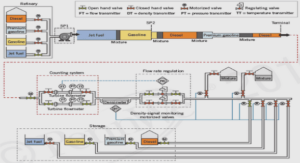

Translation In this section, we describe a variant of the phrase-based approach to Machine Translation that will be used in experiments in the rest of the dissertation. The n-gram based approach described here corresponds to LIMSI’s in-house n-code implementation 3 (Crego et al., 2011) which has been exploited and showed to achieve state-of-the-art results in several translation evaluations, such as the WMT (Workshop on Statistical Machine Translation) and IWSLT (International Workshop on Spoken Language Translation) evaluation campaigns. The n-gram-based approach to SMT borrows its main principle from the finite-state perspective (Casacuberta and Vidal, 2004) in which the translation process is divided in two sub-processes : a source reordering step and a (monotonic) translation step. The source reordering is based on a set of rewrite rules that non-deterministically reorder the input words so as to match the order of the target words (Crego and Mariño, 2006). The application of these rules yields a finite-state graph representing possible source reorderings, which are then used to carry out a monotonic translation from source to target phrases. The main latent variables in this approach is the segmentation of sentence pairs into elementary translation units called tuples, which are equivalent to phrase pairs in the phrase-based approach, and which correspond to pairs of variable-length sequences of source and target words. The n-gram-based approach hence differs from the standard phrase-based approach by the latent variables a that describe the reordering of the source 3ncode.limsi.fr/ s̅ 8 : à t̅ 8: to s̅ 9 : recevoir t̅ 9: receive s̅ 10: le t̅ 10: the s̅ 11 : nobel de la paix t̅ 11: nobel peace s̅ 12: prix t̅ 12: prize u8 u9 u10 u11 u12 s : …. t : …. org : …. à recevoir le prix nobel de la paix …. …. Figure 1.1 – The segmentation of a parallel phrase pair (s, t) into L bilingual tuples (u1, …, uL). 
The original source sentence (org) is shown above the reordered source sentence s and the target sentence t. Each tuple ui aligns a source phrase si to a target phrase t. sentence, and the joint segmentation into bilingual tuples. 4 Another particularity of the n-gram-based approach is the inclusion of n-gram translation models estimated on atomic tuples.
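An n-gram translation model over tuples treats each bilingual tuple as a single token and scores a segmented sentence pair as a product of conditional tuple probabilities, p(s, t) ≈ ∏_i p(u_i | u_{i-n+1}, …, u_{i-1}). The sketch below uses a bigram order and unsmoothed maximum-likelihood estimates, both simplifications; practical systems use higher orders and smoothing. The toy corpus reuses the tuples u8 to u12 of Figure 1.1, treated as a complete sentence for illustration, plus a second, hypothetical segmentation.

```python
from collections import Counter

BOS, EOS = ("<s>", "<s>"), ("</s>", "</s>")  # sentence-boundary pseudo-tuples

def train_bigram(corpus):
    """Unsmoothed maximum-likelihood bigram model over bilingual tuples."""
    bigrams, history = Counter(), Counter()
    for tuples in corpus:
        seq = [BOS, *tuples, EOS]
        for prev, cur in zip(seq, seq[1:]):
            bigrams[(prev, cur)] += 1
            history[prev] += 1

    def prob(prev, cur):
        """p(cur | prev), or 0.0 for an unseen history."""
        return bigrams[(prev, cur)] / history[prev] if history[prev] else 0.0

    return prob

def joint_prob(tuples, model):
    """p(s, t) approximated as prod_i p(u_i | u_{i-1}) for one tuple segmentation."""
    seq = [BOS, *tuples, EOS]
    result = 1.0
    for prev, cur in zip(seq, seq[1:]):
        result *= model(prev, cur)
    return result

corpus = [
    [("à", "to"), ("recevoir", "receive"), ("le", "the"),
     ("nobel de la paix", "nobel peace"), ("prix", "prize")],
    [("le", "the"), ("prix", "prize")],  # hypothetical second sentence
]
model = train_bigram(corpus)
print(joint_prob([("le", "the"), ("prix", "prize")], model))
```

Because each tuple carries both its source and target words, such a model conditions every translation decision on the previous bilingual context, unlike the phrase-based model where phrase pairs are scored independently.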
