This manuscript presents an overview of the work carried out during this PhD in the field of Reflective Semiconductor Optical Amplifiers (RSOAs). RSOAs promise to play a key role in the evolution of optical communication networks. Although the development of SOA devices got off to a sluggish start compared with that of fibre amplifiers, recent progress in optical semiconductor fabrication techniques and device design has significantly improved the performance of RSOAs. RSOAs therefore appear to be strong candidates for the next generation of colourless sources in reflective Optical Network Units (ONUs), which form the interface between the optical fibre and the customer.

Communication networks have evolved to meet the growing demands of our bandwidth-hungry world. From 1950 onwards, coaxial cables replaced simple copper wire pairs in long- and medium-range communication networks. The bit rate-distance product (BL) is commonly used as a figure of merit for communication systems, where B is the bit rate (bit/s) and L is the repeater spacing (km). In coaxial cable, the bandwidth was limited by frequency-dependent cable losses, and the best microwave communication system available by 1970 had a BL product of ~100 (Mbit/s)-km.

A suitable transmission medium was needed, and optical fibres were identified as the best option for guiding light from 1966 onwards [1-2]. The fibre optic revolution then started in 1980, when fibres with an attenuation of only 4 dB/km became available. Such low attenuation ushered in optical fibre telecommunications. A radical change occurred: information was now transmitted as pulses of light. Further increases in the BL product became possible with this new medium because the physical mechanisms behind frequency-dependent losses differ between copper cables and optical fibres. A new challenge then appeared: coherent optical sources had to be developed (the evolution of lasers and SOAs is described below).

To reach appreciable distances, early fibre-optic communication systems relied on optoelectronic repeaters. The first fibre optic system achieved a bit rate of 45 Mb/s with a repeater spacing of 10 km (compared with 1 km for coaxial systems). This first system was based on GaAs lasers emitting at 0.8 µm. Fibre attenuation was a major issue, and systems gradually moved towards lower-attenuation spectral windows. The first shift was to 1.3 µm, where losses and dispersion in optical fibres were lower, allowing transmission at 1.7 Gb/s with a repeater spacing of 50 km (in 1987). However, fibre losses (~0.5 dB/km) remained the main drawback. This was overcome by moving to 1.55 µm, where fibre losses are lowest, but where chromatic dispersion is large. The solution was to restrict the laser spectrum to a single longitudinal mode (SM) and to use dispersion-shifted fibre (DSF), whose zero-dispersion wavelength lies near 1.55 µm [4]. As a result, by 1990 lightwave systems operated at 10 Gb/s using DSF and SM InGaAsP lasers. Electronic repeaters, spaced about 60-70 km apart for signal regeneration, were the main limitation on overall system performance. This problem was solved by the fourth generation of optical systems with the advent of optical fibre amplification. Optical amplifiers were considered an ideal alternative, enabling optically transparent networks and overcoming the electronic bottleneck.
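As a rough illustration of the BL figure of merit defined above, the short Python sketch below evaluates it for the fibre systems quoted in this section and compares each with the ~100 (Mbit/s)-km of the best 1970 microwave system. The function name `bl_product` is hypothetical, and the 1990 entry assumes the lower end of the quoted 60-70 km repeater spacing.

```python
def bl_product(bit_rate_bit_per_s: float, repeater_spacing_km: float) -> float:
    """Bit rate-distance product BL, expressed in (Mbit/s)-km."""
    return bit_rate_bit_per_s / 1e6 * repeater_spacing_km


# (system, bit rate in bit/s, repeater spacing in km), using figures quoted in the text
systems = [
    ("0.8 µm GaAs system", 45e6, 10.0),
    ("1.3 µm system (1987)", 1.7e9, 50.0),
    ("1.55 µm DSF system (1990)", 10e9, 60.0),  # assumption: lower end of 60-70 km
]

# Best microwave system available by 1970, as quoted in the text, in (Mbit/s)-km
MICROWAVE_1970_BL = 100.0

for name, bit_rate, spacing in systems:
    bl = bl_product(bit_rate, spacing)
    ratio = bl / MICROWAVE_1970_BL
    print(f"{name}: BL = {bl:,.0f} (Mbit/s)-km, {ratio:,.1f}x the 1970 figure")
```

The comparison makes the scale of the improvement explicit: each move to a lower-loss window multiplied BL by more than an order of magnitude.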

In 1985, the Erbium-Doped Fibre Amplifier (EDFA) was invented by Dr. Randy Giles, Professor Emmanuel Desurvire and Professor David Payne. This optical amplifier was an ideal candidate for in-line amplification: EDFAs offered high gain, low insertion loss, a low noise figure and negligible nonlinearities [5]. In 1989, EDFAs increased the repeater spacing to 60-80 km, and by 1990 they had become widespread in long-haul lightwave systems. The bit rate was further increased by a new technique: Wavelength-Division Multiplexing (WDM). WDM revolutionised system capacity from 1992 onwards, and in 1996 optically amplified systems were deployed in transatlantic and transpacific fibre optic cables (5 Gbit/s transmission over 11,300 km was demonstrated using actual submarine cables [6]).

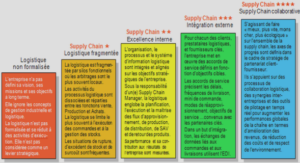

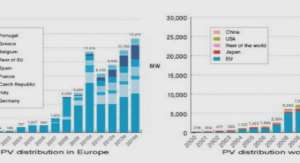

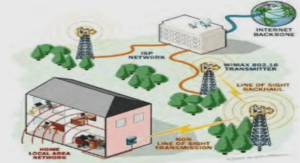

While WDM techniques were mostly used in long-haul systems employing EDFAs for in-line amplification, access networks were demanding ever more bandwidth. The access network comprises the infrastructure used to connect end users (Optical Network Unit, ONU) to a central office (CO), which is in turn connected to the metropolitan or core network. The distance between the ONU and the CO is up to 20 km. The evolution of the access network was very different from that of the core network [7], and high bit rate transmission is a recent need. Originally, the access network was based on copper cable and provided a maximum bandwidth of 3 kHz (digitised at 64 kbit/s) for voice transmission. Today, a wide range of services must be carried by the access network, and new technologies are being introduced that allow flexible, high-bandwidth connections. This evolution is evident in Europe in the rapid growth of xDSL technologies (DSL: Digital Subscriber Line), which provide a broadband connection over a copper line while maintaining the user's telephone service. In 2000, the maximum bit rate was around 512 kbit/s, whereas today it is around 12 Mbit/s. However, since 2005, new applications such as video-on-demand have required even more bandwidth, and xDSL technologies have reached their limits. The introduction of broadband access networks based on FTTx technology is necessary to meet the recent explosive growth of the Internet, and Internet service providers now offer 100 Mbit/s over optical fibre. The access network benefits greatly from the experience gained in the evolution of the core network: the use of mature WDM technology in access and metropolitan networks should offer more scalability and flexibility for the next generation of optical access networks.

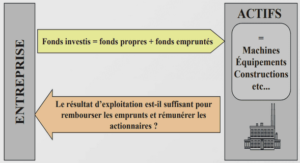

However, cost is the main driver of access network deployment and remains the principal issue. A cost-effective migration is needed, and the capital expenditure (CAPEX) per customer has to be reduced. Since the ONU has a direct impact on CAPEX, new optical devices are needed to obtain high-performance, low-cost ONUs. In this work, a WDM passive optical network (PON) based on RSOAs is studied. A complete analysis of access network evolution has been carried out, in which upgrade scenarios for GPON (Gigabit Passive Optical Network), BPON (Broadband PON) and EPON (Ethernet PON) are analysed [8-9].

The development of semiconductor materials enabled the fabrication of high-performance devices such as lasers and optical amplifiers. The development of semiconductor optical amplifiers (SOAs) followed that of laser diodes: an SOA has a gain medium like that of a laser, but without the resonant cavity. The concept of stimulated emission was introduced by A. Einstein in 1917. The feasibility of stimulated emission in semiconductors was demonstrated in 1961 [10-11], and in 1962 lasing in semiconductor materials was first observed by several research groups [12-13]. The first lasers were GaAs homojunction devices operating at low temperature; they were called Fabry-Perot lasers because of their Fabry-Perot cavity (a standard cavity in which an amplifying medium lies between two mirrors). The heterostructure design was proposed in 1963 [14] and demonstrated in 1969 [15]. The arrival of heterostructure devices spurred research on SOAs, which were at first regarded simply as poor lasers. In fact, lasers with a high threshold current were attractive for amplifying optical beams when operated below the lasing threshold. These first semiconductor optical amplifiers were therefore called Fabry-Perot SOAs (FP-SOAs) and were investigated extensively [16-17]. In the 1970s, FP-SOAs were not seriously considered as candidates for optical telecommunications [18-19], owing to their low gain (operation below threshold current), high gain ripple and instability during amplification caused by their proximity to the threshold condition. In the 1980s, important advances in reducing facet reflectivity were made and a new type of SOA appeared. In 1982, J. C. Simon reported the first travelling-wave SOA (TW-SOA), with a facet reflectivity of around 10⁻³ [20], which overcame the previous drawbacks of FP-SOAs.
Because of the low facet reflectivity, the threshold current was extremely high: a gain of 15 dB was obtained, and instability was no longer an issue even at a relatively high bias current (~80 mA). High-quality anti-reflection coatings were then developed, and the first TW-SOA in the InGaAsP system with a facet reflectivity of 10⁻⁵ was realised in 1986 [21]. The first SOAs were AlGaAs-based and operated in the 830 nm region [22]; InP/InGaAsP SOAs, centred in the 1.3 µm and 1.5 µm windows, appeared in the late 1980s [23]. In 1989, polarisation-insensitive devices became a reality thanks to symmetrical waveguide structures designed specifically for SOAs [24]. Previously, SOA structures used laser diode designs (asymmetrical waveguide structures), which led to strongly polarisation-sensitive gain.
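Why lower facet reflectivity removed the gain ripple of FP-SOAs can be illustrated with the standard peak-to-valley ripple expression for a Fabry-Perot amplifier, ((1 + G_s√(R₁R₂)) / (1 − G_s√(R₁R₂)))², where G_s is the single-pass gain and R₁, R₂ are the facet reflectivities. This expression is not taken from this manuscript, and the Python sketch below additionally assumes, for illustration only, that the 15 dB quoted above is the single-pass gain and that both facets have equal reflectivity. The helper names are hypothetical.

```python
import math


def db_to_linear(x_db: float) -> float:
    return 10 ** (x_db / 10)


def linear_to_db(x: float) -> float:
    return 10 * math.log10(x)


def fp_gain_ripple_db(single_pass_gain_db: float, r1: float, r2: float) -> float:
    """Peak-to-valley gain ripple of a Fabry-Perot amplifier, in dB.

    Standard Fabry-Perot amplifier expression (assumed here, not from the text):
        ripple = ((1 + Gs*sqrt(R1*R2)) / (1 - Gs*sqrt(R1*R2)))**2
    Valid only while Gs*sqrt(R1*R2) < 1, i.e. below the lasing threshold.
    """
    gs = db_to_linear(single_pass_gain_db)
    x = gs * math.sqrt(r1 * r2)
    if x >= 1:
        raise ValueError("Gs*sqrt(R1*R2) >= 1: the device would lase")
    return linear_to_db(((1 + x) / (1 - x)) ** 2)


# Facet reflectivities quoted for the 1982 and 1986 TW-SOAs; 15 dB assumed single-pass gain
for r in (1e-3, 1e-5):
    print(f"R = {r:g}: ripple = {fp_gain_ripple_db(15.0, r, r):.3f} dB")
```

Under these assumptions, the ripple falls from roughly half a decibel at R = 10⁻³ to a few thousandths of a decibel at R = 10⁻⁵, which is consistent with the qualitative improvement described above.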

After the invention of the EDFA, research on SOAs (more specifically on TW-SOAs) slowed down for applications such as in-line amplification, which was the main application at that time. As described above, EDFAs were chosen as the preferred solution for in-line amplification in core networks.

New applications were therefore investigated to take advantage of the distinctive features of SOAs. These devices can be used as building blocks for all-optical switches and optical cross-connects [25-26]. Strong nonlinear phenomena also occur in SOAs and can be exploited for wavelength conversion and cross-gain modulation [27-28]. Other applications use SOAs as intensity/phase modulators [29-30], logic gates [31-32], clock recovery elements [33-35], dispersion compensators [36-37] and Non-Return-to-Zero (NRZ) to Return-to-Zero (RZ) format converters [38], among others.

Introduction |