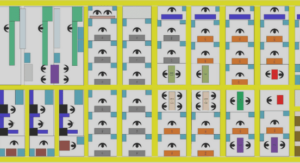

Adaptive Multipath Routing architecture

Our proposed routing architecture, called AMR, consists of an OpenFlow centralized controller based application designed to “discover » topology, calculate multiple paths with maximum flow capacity between nodes, and alter the forwarding table of switches dynamically to set up loop-free multipath forwarding and routing.

A multipath routing module (MRM) is responsible for calculating multiple paths, and sets up those paths using various features of the OpenFlow switch: multiple tables, group entries, and meter entries. Ingress flows from the downstream ports of an edge node are transparently mapped to backbone-level paths with PBB to provide in network multipaths. So, any higher layer, IP L4 TCP or UDP, for example, is transparently forwarded on multipaths by L2 multipath capabilities.

In DCN, networks are expected to operate without interruption, even in the presence of node or link failures. The AMR accomplishes three key tasks: adaptation to link failures, multipath routing computation, and path setup, as follows.

Adaptation to link failures

The AMR adapts to network state changes, such as link up and link down, as follows.

In an OpenFlow-enabled network, a central controller controls all nodes via the OpenFlow protocol. A topology discovery module (TDM) running on top of the controller connects to the OpenFlow switches and automatically discovers the topology by listening to LLDP (IEEE 802.1AB-2009 Link Layer Discovery Protocol (IEEE Standard 802.1AB, 2009)) packets, and then triggers link-up events on link discovery, or link-down events on link failure.

Two threads, called Main and adaptiveRouting, are called at the controller’s multipath routing module (MRM) startup. Three different data structures are used: edgeNodes, a list for storing edge nodes; multiPDict, a five-dimensional (destination (t), source (s), node (u), in port, out port) dictionary for storing outgoing bandwidth weights; and outPortDict, a two-dimensional (node u, node v) dictionary for storing outgoing ports for u-v pairs. The Main thread listens to link events and tracks changes by enqueuing link events in queue Q. The adaptiveRouting thread initially waits for a link event in Q, at which point the procedure dequeues all link events, updates the outPortDict and a topology graph G. It then calls the multiPathRouting(G) procedure, which computes and sets up multipaths according to G by calling the multiPathCompute(G,s,t) and multiPathSetup(t,s) procedures for every s-t pair from the edge nodes discovered. So, G represents a topology when a routing has been calculated. All network changes made during a routing computation will be queued on Q and processed on the next iteration.

Multipath routing computation

The most straightforward algorithms for calculating throughput paths are maximum flow algorithms, Ford-Fulkerson and Edmonds-Karp (Cormen et al., 2009), for example. These algorithms produce a set of links and capacities designed to maximize the aggregated capacity between nodes. In a packet network, however, packets that traverse different paths may reach the receiver in a different order. In this case, the TCP retransmission mechanism, which is based on the packet’s round trip time (RTT), is triggered to recover from the loss. In order to reduce the possibility of out-of-order packet delivery, the length of multiple paths should be considered and the intended path of the flow needs to be maintained. Multiple paths between two nodes can be limited, such that the difference between each path length and the shortest path does not exceed R hops (e.g. R=1). To preserve the intended path of the flow on multiple paths, we have developed an algorithm, based on Edmonds Karp, to compute the outgoing interface rate for each incoming interface on every node on the paths, instead of computing the outgoing interface rate of every node on the paths.

INTRODUCTION |